How the EEOC’s New AI Guidelines Affect You

The US Equal Employment Opportunity Commission (EEOC) has issued new guidelines on using AI tools during the recruitment process. Here’s how they affect you.

TL;DR: employers are being encouraged to thoroughly investigate the technology they use.

Disparate Impact

These guidelines are concerned with AI recruiting tools causing inadvertent discrimination and bias, legally referred to as disparate impact.

Disparate impact discrimination occurs when employers use neutral tests or selection procedures that have the effect of disproportionately excluding people based on race, colour, religion, sex or national origin.

A test or selection procedure that has this effect is lawful only if it is job related and consistent with business necessity.

If that’s confusing, here’s an example of disparate impact discrimination:

Candidates for a receptionist role must lift 40kg during their interview. This test screens out candidates with physical disabilities. Because lifting is not relevant to the role and the test unfairly eliminates candidates, it is disparate impact. If the same test were used when interviewing a warehouse worker, it would not be disparate impact, as lifting heavy items is one of that job’s duties.

These guidelines are targeted at tools like AI video screening, which may screen out people with speech disabilities or conditions like Moebius syndrome, and AI resume screening, which could disproportionately affect those whose second language is English.

Employers v Software Vendor

So, if disparate impact discrimination is occurring, who is liable – the employer or the software vendor?

The EEOC says that in many cases the employer can be held responsible for the effects of the tools it uses, even when those tools were designed or are administered by a software vendor.

Employers therefore need to carefully assess the AI tools they use. Asking the vendor what types of bias may be present is an important step, but employers should also make their own independent assessments. This matters because employers can still be held liable if the software vendor provides inaccurate information about the tool, whether knowingly or unknowingly.

If you discover that a tool is causing disparate impact, you should take steps to reduce the impact or stop using the tool altogether.

How to Assess

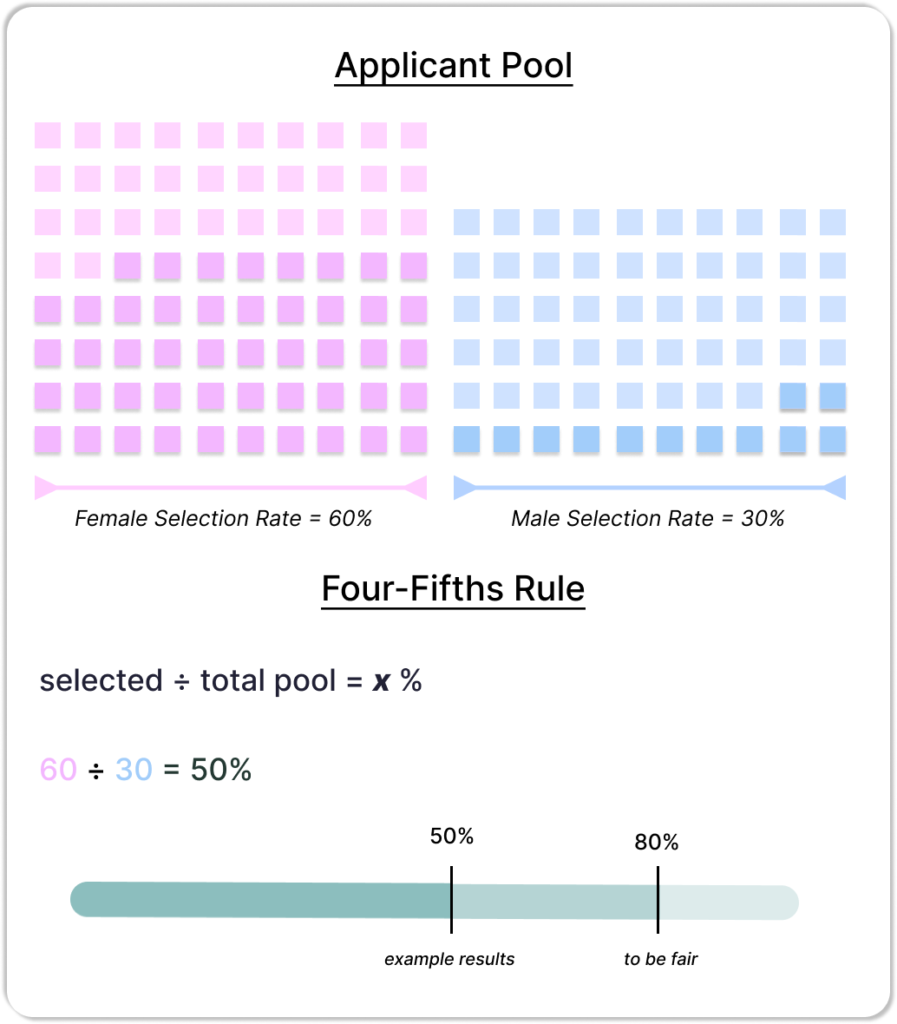

The EEOC points employers to the four-fifths rule, a general rule of thumb for determining whether the selection rate for one group is substantially different to that of another. If one group’s selection rate is less than 80% of the highest group’s rate, there may be bias in your selection process. Note that US courts have agreed the rule is inappropriate in some cases, but it is still a good rule of thumb.

The selection rate is the proportion of candidates in a group who are selected (hired, promoted, etc.). You can calculate it by dividing the number of candidates selected by the total number of candidates in the group.

The four-fifths rule states that a selection rate for a group that is less than four-fifths (80%) of the rate for the group with the highest selection rate shows evidence of disparate impact.
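In symbols (the notation is introduced here for illustration only): s_g is the number of candidates selected from group g, n_g is the number of candidates in that group, and IR_g is the ratio of that group’s selection rate to the highest selection rate across all groups, which is the comparison the EEOC’s Uniform Guidelines on Employee Selection Procedures use.

```latex
\mathrm{SR}_g = \frac{s_g}{n_g},
\qquad
\mathrm{IR}_g = \frac{\mathrm{SR}_g}{\max_h \mathrm{SR}_h},
\qquad
\mathrm{IR}_g < \frac{4}{5} \;\Longrightarrow\; \text{evidence of disparate impact}
```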

Here’s an example:

Sarah is hiring a new Product Manager and using AI to screen the initial CVs.

There are 80 female applicants and 40 male applicants. The AI approves 48 of the female applicants and 12 of the male applicants, so females have a selection rate of 60% (48 ÷ 80) and males a selection rate of 30% (12 ÷ 40). To work out whether the AI passes the four-fifths rule, we divide the lower rate by the higher one: 30 ÷ 60 gives us 50%. As this is below the required 80%, there is evidence of disparate impact in the selection process.
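The same calculation can be sketched in a few lines of Python, so you could run it on your own screening numbers. This is a minimal illustration: the function names, the dictionary inputs and the group labels are assumptions made for this example, not part of any EEOC or vendor tool. Each group is compared with the highest selection rate, as in the EEOC’s Uniform Guidelines.

```python
def selection_rates(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Selection rate per group: candidates selected divided by candidates in the group."""
    return {group: selected[group] / applicants[group] for group in applicants}


def four_fifths_check(
    applicants: dict[str, int],
    selected: dict[str, int],
    threshold: float = 0.8,
) -> dict[str, tuple[float, bool]]:
    """Compare each group's selection rate with the highest rate.

    Returns {group: (impact_ratio, flagged)}, where flagged is True when the
    impact ratio falls below the threshold (four-fifths by default).
    """
    rates = selection_rates(applicants, selected)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no candidates were selected in any group")
    return {
        group: (rate / highest, rate / highest < threshold)
        for group, rate in rates.items()
    }


# Sarah's Product Manager screening numbers from the example above
applicants = {"female": 80, "male": 40}
selected = {"female": 48, "male": 12}

for group, (ratio, flagged) in four_fifths_check(applicants, selected).items():
    print(f"{group}: impact ratio {ratio:.0%}, below four-fifths: {flagged}")
```

Running it prints an impact ratio of 100% for female applicants and 50% for male applicants, with the male ratio flagged as below four-fifths. Because the rule is only a rule of thumb, a flagged result is a prompt to investigate the tool further rather than a legal conclusion.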

The Future

Many countries are beginning to look at the implications of AI in hiring.

In Australia, the Merit Protection Commissioner has also released guidelines after AI-assisted hiring decisions made by a government agency were overturned. We’re likely to see more guidelines, and soon legislation, put in place to protect workers’ rights and ensure AI tools are not causing employers to break the law.

If you’re wondering whether attract.ai can cause disparate impact, the answer is no.

We use AI to help uncover candidates and present them to you, using complex algorithms so that you always have a random selection of high-quality results to choose from. We’re big believers in keeping humans in the recruitment process, and we also disclose the gender bias within each individual AI model we present to you.

We’d recommend asking your software vendor about bias, evaluating your tools regularly using the four-fifths rule, and keeping up to date with any new developments from your relevant fair work department.